Jun 07, 2024

With programmable pixels, novel sensor improves imaging of neural activity

(Nanowerk News) Neurons communicate electrically, so to understand how they produce brain functions such as memory, neuroscientists must track how their voltage changes, sometimes subtly, on the timescale of milliseconds. In a new paper in Nature Communications ("Pixel-wise programmability enables dynamic high-SNR cameras for high-speed microscopy"), MIT researchers describe a novel image sensor that could substantially improve their ability to do so.

The invention, led by Jie Zhang, a postdoctoral scholar in the lab of Sherman Fairchild Professor Matt Wilson at The Picower Institute for Learning and Memory, is a new take on the standard CMOS technology used in scientific imaging. In that standard approach, all pixels turn on and off at the same time, a configuration with an inherent trade-off: fast sampling means capturing less light.

The new chip allows each pixel’s exposure timing to be controlled individually. That arrangement provides a “best of both worlds” in which neighboring pixels complement each other to capture all the available light without sacrificing speed.

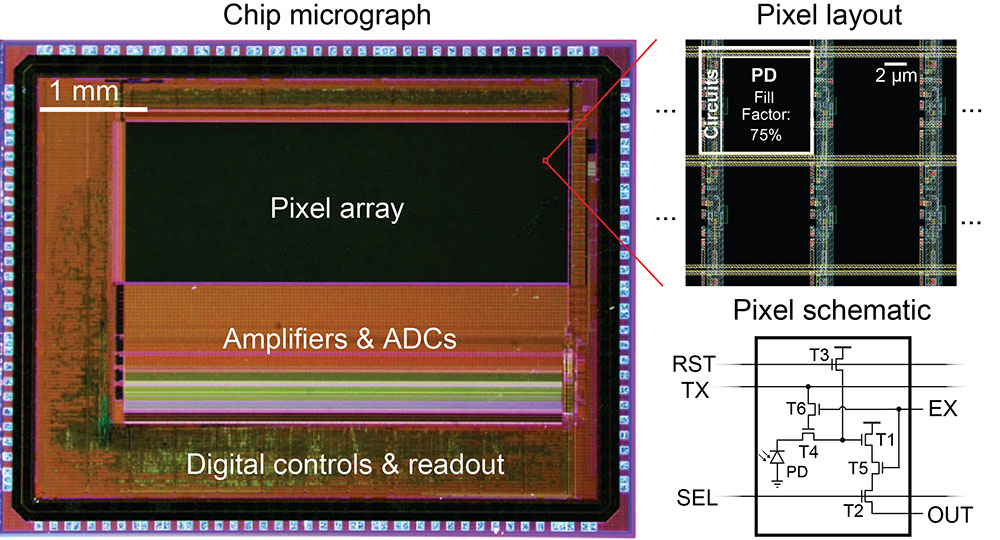

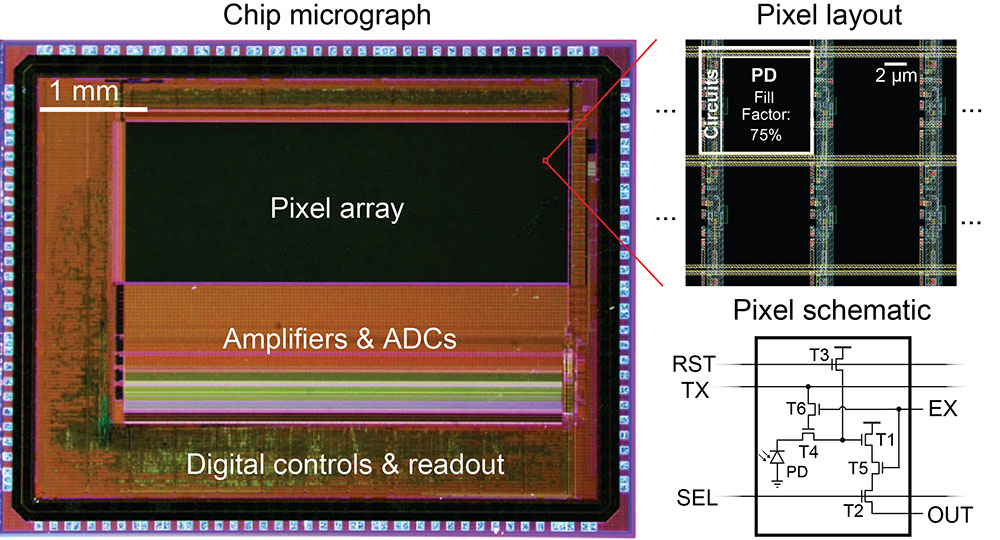

In this image adapted from the paper's figures, the left side shows the chip micrograph, while the right side displays the pixel layout and schematics, highlighting each circuit element. The new pixel circuit uses only two additional transistors (T5 and T6) compared with the conventional CMOS pixel. This minimalist design allows pixel exposures to be programmed independently without sacrificing photodiode area to the circuits, ensuring high sensitivity under low-light conditions. (Image: Jie Zhang, MIT Picower Institute)

In experiments described in the study, Zhang and Wilson’s team demonstrates how “pixelwise” programmability enabled them to improve visualization of neural voltage “spikes,” which are the signals neurons use to communicate with each other, and even the more subtle, momentary fluctuations in their voltage that constantly occur between those spiking events.

“Measuring with single-spike resolution is really important as part of our research approach,” said senior author Wilson, a professor in MIT’s Departments of Biology and Brain and Cognitive Sciences (BCS), whose lab studies how the brain encodes and refines spatial memories both during wakeful exploration and during sleep. “Thinking about the encoding processes within the brain, single spikes and the timing of those spikes is important in understanding how the brain processes information.”

For decades, Wilson has helped drive innovations in the use of electrodes to tap into neural electrical signals in real time, but like many researchers, he has also sought visual readouts of electrical activity because they can cover large areas of tissue while still showing exactly which neurons are electrically active at any given moment. Identifying which neurons are active can reveal which types of neurons participate in memory processes, providing important clues about how brain circuits work.

In recent years, neuroscientists including co-senior author Ed Boyden, Y. Eva Tan Professor of Neurotechnology in BCS and The McGovern Institute for Brain Research and a Picower Institute affiliate, have worked to meet that need by inventing “genetically encoded voltage indicators” (GEVIs), which make cells glow as their voltage changes in real time. But as Zhang and Wilson have tried to employ GEVIs in their research, they’ve found that conventional CMOS image sensors were missing a lot of the action. If the sensors operated too fast, they wouldn’t gather enough light. If they operated too slowly, they’d miss rapid changes.

But image sensors have such fine resolution that, at the scale of a whole neuron, many pixels are essentially looking at the same place, Wilson said. Recognizing that there was resolution to spare, Zhang applied his expertise in sensor design to invent an image sensor chip that lets neighboring pixels each have their own timing. Faster pixels could capture rapid changes; slower ones could gather more light. No action or photons would be missed. Zhang also cleverly engineered the required control electronics so they barely cut into the space available for the light-sensitive element of each pixel. This ensured the sensor’s high sensitivity under low-light conditions, Zhang said.

Two demos

In the study, the researchers demonstrated two ways in which the chip improved imaging of the voltage activity of mouse hippocampal neurons cultured in a dish. They ran their sensor head-to-head against an industry-standard scientific CMOS image sensor chip.

In the first set of experiments, the team sought to image the fast dynamics of neural voltage. On the conventional CMOS chip, each pixel had a zippy 1.25-millisecond exposure time. On the pixel-wise sensor, each pixel in neighboring groups of four stayed on for 5 milliseconds, but their start times were staggered so that each one turned on and off 1.25 milliseconds after the previous one. In the study, the team shows that each pixel gathered more light because it was on longer, yet because the group as a whole captured a new view every 1.25 milliseconds, it still achieved the same fast temporal resolution as the conventional chip.
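
To make the staggered schedule concrete, here is a minimal Python sketch of the timing alone, assuming the figures quoted above (groups of four pixels, 5 ms exposures, start times offset by 1.25 ms) and, as a simplifying assumption, back-to-back exposures with no dead time between frames. The function name `staggered_windows` is hypothetical and says nothing about how the chip's control circuitry is actually programmed; it only shows that the group delivers a fresh frame every 1.25 ms even though each pixel exposes four times as long.

```python
EXPOSURE_MS = 5.0                             # per-pixel exposure in the first experiment
PIXELS_PER_GROUP = 4                          # neighboring pixels viewing the same spot
STAGGER_MS = EXPOSURE_MS / PIXELS_PER_GROUP   # 1.25 ms offset between successive pixels


def staggered_windows(n_cycles):
    """Return (pixel, start_ms, end_ms) for every exposure, ordered by readout time.

    Assumption (hypothetical): each pixel exposes back to back with no dead time,
    and pixel p starts p * STAGGER_MS after pixel 0.
    """
    windows = []
    for cycle in range(n_cycles):
        for pixel in range(PIXELS_PER_GROUP):
            start = cycle * EXPOSURE_MS + pixel * STAGGER_MS
            windows.append((pixel, start, start + EXPOSURE_MS))
    windows.sort(key=lambda w: w[2])  # a frame becomes available when its exposure ends
    return windows


windows = staggered_windows(n_cycles=3)
readouts = [end for _, _, end in windows]
gaps = {round(b - a, 6) for a, b in zip(readouts, readouts[1:])}
print(gaps)                       # {1.25}: one new frame every 1.25 ms
print(EXPOSURE_MS / STAGGER_MS)   # 4.0: each frame integrates light 4x longer
```

The same four-pixel group could instead run a conventional global-shutter schedule by giving every pixel the same start time; staggering is what turns four slow samplers into one fast one.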

The result was a doubling of the signal-to-noise ratio for the pixelwise chip. This achieves high temporal resolution at a fraction of the per-pixel sampling rate of conventional CMOS chips, Zhang said.
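
The reported doubling is consistent with a simple shot-noise argument. A minimal sketch, assuming photon shot noise dominates (so SNR is roughly the square root of the number of collected photoelectrons) and using an arbitrary, hypothetical photon flux: stretching the exposure from 1.25 ms to 5 ms collects four times as many photons and so doubles the SNR. This is an idealized back-of-the-envelope check, not the noise analysis reported in the paper; `shot_noise_snr` and `FLUX` are illustrative names.

```python
import math


def shot_noise_snr(photon_flux_per_ms, exposure_ms, read_noise_e=0.0):
    """SNR of one exposure with Poisson shot noise plus optional Gaussian read noise.

    Signal = flux * exposure (photoelectrons); noise = sqrt(signal + read_noise^2).
    """
    signal = photon_flux_per_ms * exposure_ms
    return signal / math.sqrt(signal + read_noise_e ** 2)


FLUX = 100.0  # hypothetical photoelectrons per ms reaching one pixel

snr_conventional = shot_noise_snr(FLUX, 1.25)  # global shutter, 1.25 ms frames
snr_pixelwise = shot_noise_snr(FLUX, 5.0)      # staggered pixels, 5 ms each
print(round(snr_pixelwise / snr_conventional, 3))  # 2.0
```

Setting `read_noise_e` above zero pushes the ratio above 2, because a fixed read-noise penalty weighs more heavily on the dimmer 1.25 ms frames.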

Moreover, the pixelwise chip detected neural spikes that the conventional sensor missed. And when the researchers compared the performance of each kind of sensor against electrical readings made with a traditional patch-clamp electrode, they found that the staggered pixelwise measurements more closely matched the patch-clamp recordings.

In the second set of experiments, the team sought to demonstrate that the pixelwise chip could capture both the fast dynamics and the slower, more subtle “subthreshold” voltage variations neurons exhibit. To do so, they varied the exposure durations of neighboring pixels in the pixelwise chip, from 15.4 milliseconds down to just 1.9 milliseconds. In this way, fast pixels sampled every quick change (albeit faintly), while slower pixels integrated enough light over time to track even subtle, slower fluctuations. By combining the data from all the pixels, the chip was indeed able to capture both fast spiking and slower subthreshold changes, the researchers reported.
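
The trade-off between fast and slow pixels can be put in rough numbers. The Python sketch below assumes shot-noise-limited pixels and models only the two exposure extremes quoted above (1.9 ms and 15.4 ms); the spike width, baseline flux, and spike brightening (`SPIKE_MS`, `FLUX`, `DF`) are hypothetical values chosen for illustration, and the sketch does not reproduce how the researchers actually combined their pixel data.

```python
import math

FAST_MS, SLOW_MS = 1.9, 15.4  # exposure extremes reported for the second experiment
SPIKE_MS = 1.0  # hypothetical spike width, assumed shorter than both exposures
FLUX = 100.0    # hypothetical baseline photoelectrons per ms per pixel
DF = 0.2        # hypothetical fractional brightening of the indicator during a spike


def baseline_snr(exposure_ms):
    """Shot-noise-limited SNR of the slowly varying baseline: sqrt(photons)."""
    return math.sqrt(FLUX * exposure_ms)


def spike_snr(exposure_ms):
    """SNR of a spike that falls entirely inside one exposure window.

    Extra photons arrive only for SPIKE_MS, but shot noise (taken from the
    baseline photons only) accumulates over the whole exposure, so a longer
    window dilutes the spike.
    """
    extra_photons = FLUX * DF * min(SPIKE_MS, exposure_ms)
    return extra_photons / math.sqrt(FLUX * exposure_ms)


# Slow pixels win on the baseline and fast pixels win on spikes, by the same factor.
print(round(baseline_snr(SLOW_MS) / baseline_snr(FAST_MS), 2))  # 2.85
print(round(spike_snr(FAST_MS) / spike_snr(SLOW_MS), 2))        # 2.85
```

Because a brief spike adds the same number of extra photons to any window that contains it while noise keeps growing with exposure, the long pixel's gain on the slow baseline equals its loss on brief spikes in this idealized model, which is why pairing both kinds of pixels over the same neuron pays off.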

The experiments with small clusters of neurons in a dish were only a proof of concept, Wilson said. His lab’s ultimate goal is to conduct brain-wide, real-time measurements of activity in distinct types of neurons in animals even as they move freely and learn to navigate mazes. The development of GEVIs, and of image sensors like the pixelwise chip that can take full advantage of what those indicators reveal, is crucial to making that goal feasible.

“That’s the idea of everything we want to put together: large-scale voltage imaging of genetically tagged neurons in freely behaving animals,” Wilson said.

To achieve this, Zhang added, “We are already working on the next iteration of chips with lower noise, higher pixel counts, time-resolution of multiple kHz, and small form factors for imaging in freely behaving animals.”

The research is advancing pixel by pixel.
